
The world has witnessed the profound impact of Large Language Models (LLMs) like ChatGPT on understanding and generating text. A similar disruption is now on the horizon for traditional time series forecasting.

The intriguing aspect of this transformation is that the same foundational transformer architecture used in LLMs is being applied to drive this shift in forecasting. These new models, often called Foundation Models, are no longer exclusively for language or text-based data.

Why This Shift Matters Now

For a long time, demand planning relied heavily on traditional statistical methods. While these methods were augmented by Machine Learning (ML) and deep learning in recent years, several pain points persist:

- Complexity & Time: Statistical models often need tuning and can take weeks to build.

- Pattern Struggles: Traditional methods struggle to accurately capture and model highly complex and non-linear demand patterns.

- External Signals: Incorporating external signals adds significant complexity and resource demands.

It is now time to understand how these pre-trained AI models will shape our approach to demand forecasting. Models like TimesFM are designed to address these pain points directly, simplifying the process for planning professionals.

Foundation Models: Pre-Trained for Predictions

Just as LLMs were trained on massive volumes of text, these forecasting foundation models have been pre-trained on time series data from diverse industries, including retail and automotive. This broad, cross-industry training gives them a critical advantage.

The Concept of Zero-Shot Forecasting

The term widely used to describe these powerful new models is “Zero-Shot Forecasting”.

This capability is significant because a planner doesn't need to invest extensive time and resources in training the model from scratch. Instead, they can directly achieve reliable prediction results based on the underlying input data alone.

This capability greatly reduces the barrier to entry and dramatically cuts down the implementation time for advanced forecasting solutions. Notably, these zero-shot models often match or outperform traditional supervised deep learning approaches and standard statistical methods.
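To make the idea concrete, here is a minimal sketch of the zero-shot call pattern. Everything in it is an assumption for illustration: `ZeroShotForecaster`, `SeasonalNaiveStandIn`, and `zero_shot_forecast` are hypothetical names, not part of TimesFM or any other library, and the stand-in simply repeats the last week of data so the example runs offline. A real foundation model would apply its pre-trained weights at that point.

```python
from typing import List, Protocol, Sequence


class ZeroShotForecaster(Protocol):
    """Hypothetical interface: the model exposes forecast(), with no fit()."""

    def forecast(self, context: Sequence[float], horizon: int) -> List[float]:
        ...


class SeasonalNaiveStandIn:
    """Placeholder for a pre-trained model (NOT a foundation model).

    It repeats the last `season_length` observations so the sketch can run
    without downloading any weights.
    """

    def __init__(self, season_length: int = 7) -> None:
        if season_length <= 0:
            raise ValueError("season_length must be positive")
        self.season_length = season_length

    def forecast(self, context: Sequence[float], horizon: int) -> List[float]:
        if not context:
            raise ValueError("context must contain at least one observation")
        if horizon < 0:
            raise ValueError("horizon must be non-negative")
        last_season = list(context[-self.season_length:])
        return [last_season[step % len(last_season)] for step in range(horizon)]


def zero_shot_forecast(
    model: ZeroShotForecaster, history: Sequence[float], horizon: int
) -> List[float]:
    # What is absent matters here: no training loop and no per-series fit().
    # The history is passed in only as context for the prediction.
    return model.forecast(history, horizon)


# Illustrative daily sales values.
history = [13, 15, 16, 14, 19, 18, 17, 20, 22]
prediction = zero_shot_forecast(SeasonalNaiveStandIn(season_length=7), history, horizon=3)
print(prediction)
```

The point of the sketch is the shape of the workflow rather than the forecast itself: the planner supplies history and a horizon, and the model is used as-is.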

Leading the Charge in Time Series AI

The following models are among those setting the standard and establishing footprints in this emerging field:

- Google's TimesFM

- Amazon Chronos

- Salesforce Moirai

- Nixtla's TimeGPT

Furthermore, research papers such as "TIME-LLM: Time Series Forecasting by Reprogramming Large Language Models" describe frameworks that repurpose existing LLMs for general time series forecasting. Time-LLM does this by reprogramming the input time series into text prototypes that align it with the natural language modality, while leaving the LLM backbone unaltered. The authors' evaluations report better accuracy than specialized forecasting models, particularly in few-shot and zero-shot learning settings.

Deep Dive: How TimesFM Works

TimesFM (Time Series Foundation Model) is an open-source model developed by Google Research. It uses a patch-based approach that respects the natural flow of time.

Patching and Tokenizing: Instead of feeding the model every single time point (such as each individual sales value or temperature reading), TimesFM groups consecutive data points into blocks called patches (for example, one patch per 7 days if you pick a 7-day window). Each patch is converted into a “temporal token”, a vector representation that summarizes both the values and their position in time.

- Example: a daily sales series like [13, 15, 16, 14, 19, 18, 17, 20, 22, ...]

- With a patch size of 4: [13, 15, 16, 14], [19, 18, 17, 20], [22, ..., ..., ...]

- Each patch becomes a token: Token1, Token2, Token3, ...

Why is this done?

- It reduces memory and computation requirements.

- Aggregating values into patches helps the model grasp higher-level trend and seasonality.

- The sequential structure of the patches ensures the model does not lose information about the order of events.
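The patching step can be sketched in a few lines of Python. This is an illustration under stated assumptions, not TimesFM's actual code: `make_patches` is a hypothetical helper, only the nine sales values visible in the example are used, and the step where the real model maps each patch to a vector is left out.

```python
from typing import List, Sequence


def make_patches(series: Sequence[float], patch_len: int) -> List[List[float]]:
    """Split a series into consecutive, non-overlapping patches.

    Order is preserved, so patch 1 always precedes patch 2 in time.
    The final patch may be shorter if the series length is not a
    multiple of patch_len.
    """
    if patch_len <= 0:
        raise ValueError("patch_len must be positive")
    return [list(series[i:i + patch_len]) for i in range(0, len(series), patch_len)]


# The visible part of the daily sales series from the example.
daily_sales = [13, 15, 16, 14, 19, 18, 17, 20, 22]
patches = make_patches(daily_sales, patch_len=4)

for position, patch in enumerate(patches, start=1):
    # In the real model each patch would be embedded as a vector here.
    print(f"Token{position}: {patch}")
```

Nine daily values become three tokens, which is where the memory and compute savings come from. The last patch is incomplete in this toy version; how a production model handles that (for example, by padding) is outside the scope of the sketch.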

Context Length: Context length is the maximum number of time points the model can “see” and use when producing a forecast. While TimesFM is relatively compact compared to massive LLMs, its ability to handle long context is crucial. The original model primarily used a context of 512 time points. The newer TimesFM 2.5 model significantly increased the maximum supported context length to 16,384 time points.

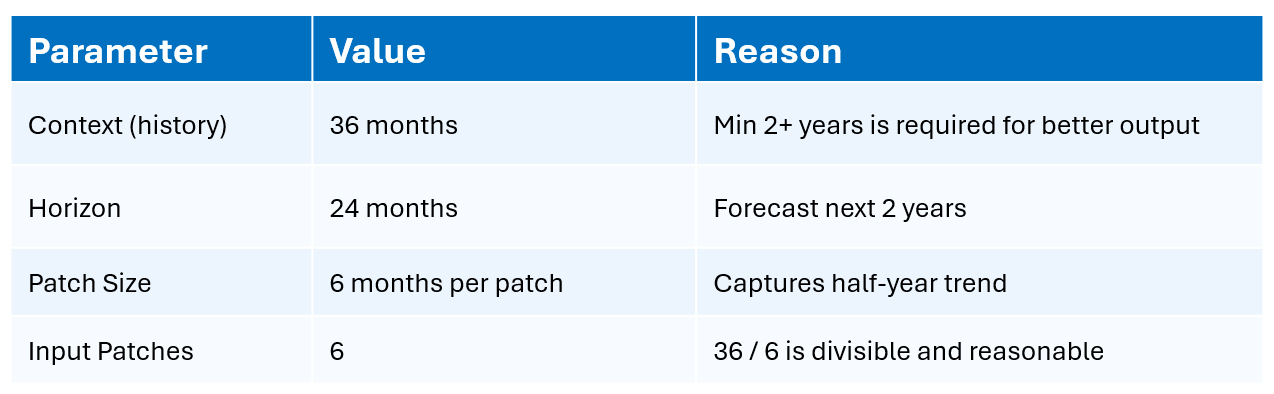

When using the model, planners specify a desired context (history) and a horizon (forecast length), with the main constraint being that these values should be multiples of the input patch length.

Note: Using a sufficient history length, up to 16k for TimesFM 2.5, helps leverage its full potential.
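As a rough sketch of how a history might be prepared to fit these limits, the helper below keeps the most recent points and trims the result to a whole number of patches. The function name `prepare_context` and the patch length of 32 are assumptions made for illustration; a library may handle this differently, for example by padding rather than trimming.

```python
from typing import List, Sequence


def prepare_context(
    history: Sequence[float], max_context: int, patch_len: int
) -> List[float]:
    """Keep the most recent points, trimmed to a multiple of patch_len."""
    if max_context <= 0 or patch_len <= 0:
        raise ValueError("max_context and patch_len must be positive")
    # Step 1: the model can only "see" the latest max_context points.
    recent = list(history[-max_context:])
    # Step 2: drop the oldest leftovers so the length is a whole number of patches.
    usable = (len(recent) // patch_len) * patch_len
    return recent[len(recent) - usable:]


# 100 illustrative observations, numbered 1..100 so trimming is easy to see.
history = list(range(1, 101))

# Assumed settings for illustration: 512-point limit, patch length of 32.
context = prepare_context(history, max_context=512, patch_len=32)
print(len(context), context[0], context[-1])

# With a tighter limit, only the latest 64 points are kept.
short_context = prepare_context(history, max_context=64, patch_len=32)
print(len(short_context), short_context[0], short_context[-1])
```

Trimming from the oldest end keeps the most recent observations, which usually carry the most signal for the next forecast.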

Parameter Summary

*The parameter set is illustrative only. In practice, trial runs and POCs are needed to arrive at the optimal settings based on data quality, industry, etc.

TimesFM’s innovative architecture and user-friendly parameter settings make it a foundational tool for anyone who needs reliable, scalable time series forecasting.

The Road Ahead: Simplified Orchestration

The integration of these Foundation Models marks a major strategic shift. The rise of Gen AI in this domain allows planners to shift their focus from time-consuming model building to strategic orchestration of data, people, and business strategy.

Foundation Models promise to simplify the underlying statistical and machine learning effort, allowing planning professionals to focus on the key insights and strategic decisions.

The core lesson remains: the future of forecasting is not about avoiding complexity, but about using powerful tools to simplify its management. With Foundation Models, the range of what is possible has expanded dramatically.